AI x Voice x Live2D Integration in Web Applications

Expressive AI Avatars for Enhanced User Experience

Roles: Product Design, UI/UX Design, Development

Recently, our company has had many discussions about the ideal interface for AI systems. We believe the interface is just as crucial as the AI technology itself, and that the right interface varies with the product and its users.

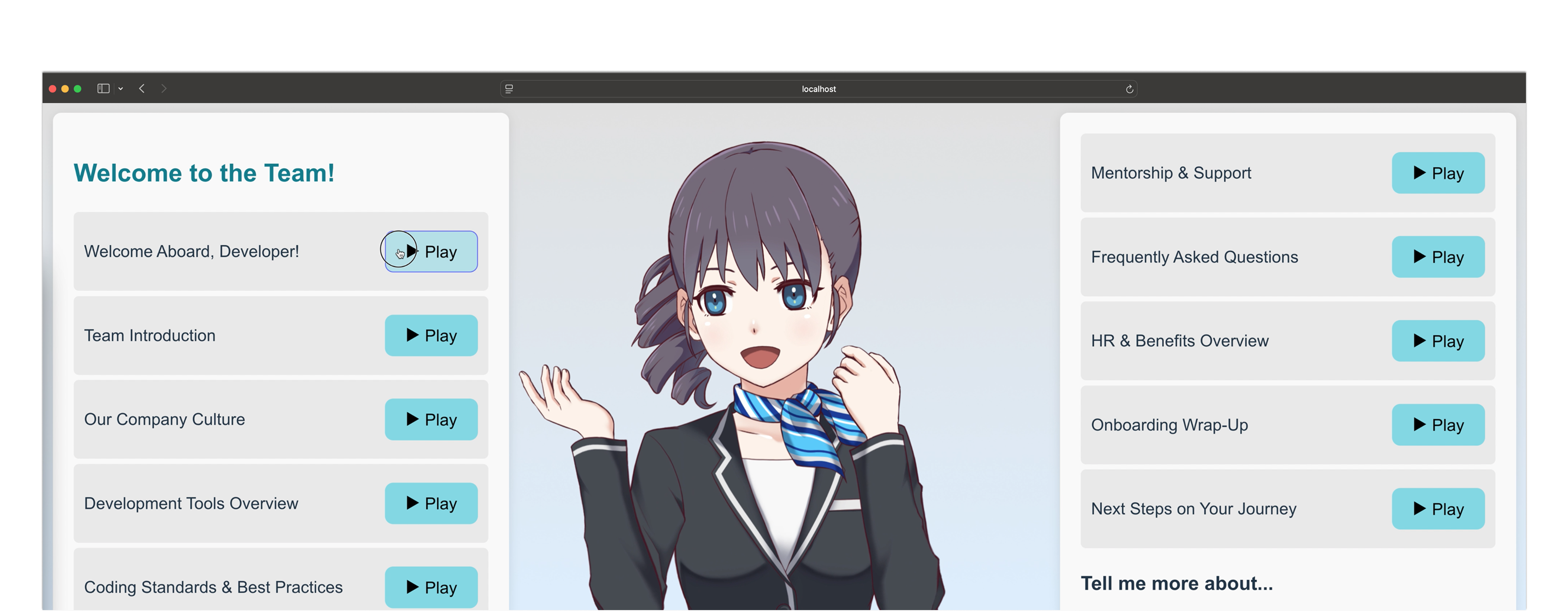

Through our experimentation with various avatar interfaces including 3D and 2D options, we've found Live2D to be particularly compatible with AI and web services - user-friendly, easy to implement, and highly effective.

Live2D is a platform that creates rich, 3D-like expressions from multi-layered 2D illustrations. The artwork is rigged: deformation points are placed strategically across the layers so that the illustration can move and animate realistically.

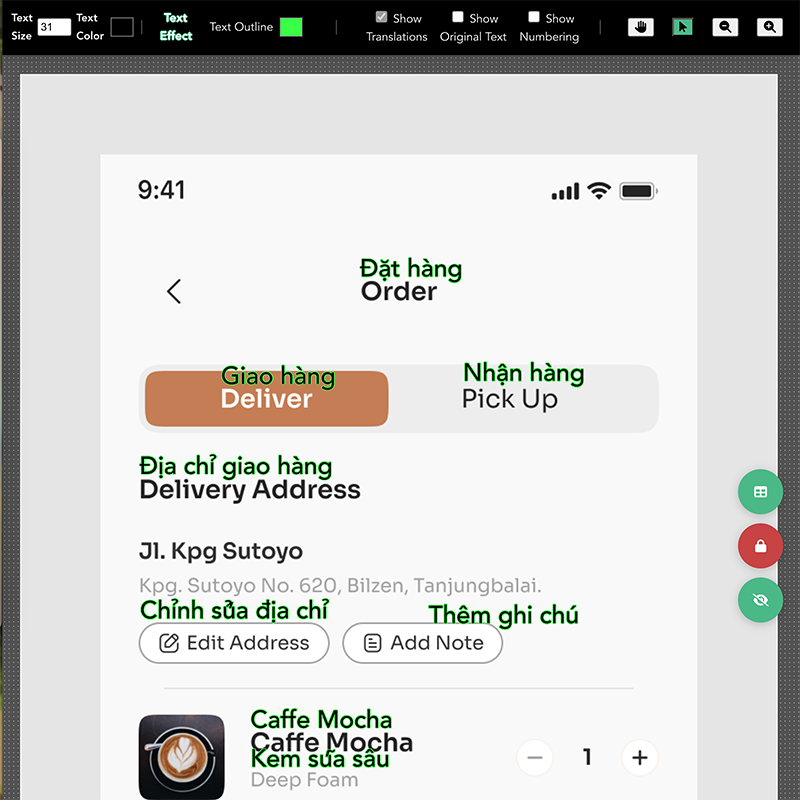

We began experimenting with Live2D as an AI interface in web applications. Live2D provides the Live2D Cubism SDK for Web for web implementation. Using the Live2D Cubism Editor, everything from simple sketches to detailed illustrations can be transformed into interactive characters (Live2D models).

Our team modified the SDK to work with the Vue.js framework. We also enhanced the lip-sync functionality to work with various Text-to-Speech (TTS) platforms, so the mouth synchronizes with speech even when the audio is in a format other than WAV.
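One common way to make lip-sync independent of the audio format is to work on decoded PCM samples instead of parsing a specific file format. The sketch below is not our production code. It assumes the TTS audio has already been decoded into a `Float32Array` (in a browser, for example, with `AudioContext.decodeAudioData` and `AudioBuffer.getChannelData`). The function names, the `gain` value, and the smoothing factor are all illustrative assumptions.

```typescript
// Root-mean-square loudness of one block of PCM samples (values in [-1, 1]).
function rms(samples: Float32Array): number {
  if (samples.length === 0) return 0;
  let sumSquares = 0;
  for (let i = 0; i < samples.length; i++) {
    sumSquares += samples[i] * samples[i];
  }
  return Math.sqrt(sumSquares / samples.length);
}

// Map a loudness level to a mouth-open value clamped to [0, 1].
// `gain` is a hypothetical tuning knob, not an SDK setting.
function mouthOpenFromLevel(level: number, gain = 4): number {
  return Math.min(1, Math.max(0, level * gain));
}

// Move part of the way from the previous value to the target,
// so the mouth does not jitter between frames.
function smooth(previous: number, target: number, factor = 0.5): number {
  return previous + (target - previous) * factor;
}

// Produce one mouth-open value per animation frame for a decoded buffer.
function mouthCurve(
  samples: Float32Array,
  sampleRate: number,
  fps = 30
): number[] {
  const blockSize = Math.max(1, Math.floor(sampleRate / fps));
  const curve: number[] = [];
  let current = 0;
  for (let start = 0; start < samples.length; start += blockSize) {
    const block = samples.subarray(start, start + blockSize);
    current = smooth(current, mouthOpenFromLevel(rms(block)));
    curve.push(current);
  }
  return curve;
}
```

Each value in the resulting curve could then be written to the model's mouth-open parameter on the matching frame. Because the input is plain PCM, the same logic applies to any format the decoder can handle.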

Please check out our demonstration video:

Although we cannot share the code for this demo, since it is largely based on the Live2D Cubism SDK for Web, we have developed a working approach for integrating Live2D with Vue.js, a popular web application framework.

The demo uses the standard animated character included with Live2D Cubism SDK for Web, but Live2D's expressive capabilities extend far beyond this. It can create nearly 3D-model-like movement with more sophisticated illustrations, or bring charming, soft cartoon-like characters to life with surprisingly rich expressiveness.

At Goldrush Computing, we're committed to creating new user experiences by combining intelligent AI conversations, advanced natural voice synthesis engines, and Live2D. If you're interested in designing AI user experiences that consider both AI logic/data and interface elements from the ground up, please feel free to contact us.

Live2D Model Key Features

- Expressive gestures: Natural movements like smiling, frowning, and blinking that respond to conversation

- Real-time lip-syncing: Mouth movements perfectly synchronized with audio
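As a rough illustration of how gestures might respond to conversation, the sketch below assumes a hypothetical convention in which the AI prefixes each reply with an emotion tag such as `[joy]`. The tag set, the `parseTaggedReply` helper, and the expression names in `EXPRESSION_FOR` are assumptions made for this example. They are not part of the Live2D SDK or of our demo.

```typescript
// Emotions the (hypothetical) prompt asks the AI to choose from.
type Emotion = "neutral" | "joy" | "sadness" | "surprise";

interface TaggedReply {
  emotion: Emotion;
  text: string; // reply with the tag removed, ready to send to TTS
}

const KNOWN_EMOTIONS: readonly Emotion[] = [
  "neutral",
  "joy",
  "sadness",
  "surprise",
];

// Placeholder expression names; a real model defines its own.
const EXPRESSION_FOR: Record<Emotion, string> = {
  neutral: "exp_neutral",
  joy: "exp_smile",
  sadness: "exp_frown",
  surprise: "exp_surprised",
};

// Split "[joy] Hello!" into an emotion and the text to be spoken.
// Unknown or missing tags fall back to "neutral".
function parseTaggedReply(raw: string): TaggedReply {
  const match = /^\s*\[(\w+)\]\s*/.exec(raw);
  if (!match) {
    return { emotion: "neutral", text: raw.trim() };
  }
  const tag = match[1].toLowerCase();
  const emotion: Emotion =
    KNOWN_EMOTIONS.indexOf(tag as Emotion) !== -1 ? (tag as Emotion) : "neutral";
  // Strip the tag even when it is unknown so it is never read aloud.
  return { emotion, text: raw.slice(match[0].length).trim() };
}

// Look up which expression the avatar should play for a reply.
function expressionForReply(raw: string): string {
  return EXPRESSION_FOR[parseTaggedReply(raw).emotion];
}
```

Keeping the tag parsing separate from the expression lookup means the spoken text and the facial expression come from the same reply without the tag ever reaching the TTS engine.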

Benefits for Product Designers

- Emotional connection: Characters with natural reactions deepen user bonds

- Enhanced brand recognition: Unique digital characters make your brand stand out

- Improved user retention: Engaging visuals and smooth interactions maintain user interest

Real-World Applications of AI-Powered Avatars

- NTT XR Concierge: AI-powered avatars deliver multilingual customer support. See more on Live2D’s Official Website.

- CHELULU by Sony Music Solutions: Uses advanced voice recognition and Live2D animation for engaging interactions. More details on Live2D’s Official Website.

Application Areas

- E-Commerce: Virtual assistants recommending products in real-time

- Healthcare: Animated characters providing emotional support to patients

- Entertainment: Virtual influencers and hosts creating new digital experiences

- Customer Service: Digital avatars fostering brand loyalty through engaging interactions

- Informational/Guide Websites: Interactive avatars simplifying application processes and improving usability

Addressing Common Concerns

1. "Is implementation expensive?"

Live2D is a cost-effective alternative to full 3D modeling. Review the Live2D License Agreement for details.

2. "Can it work across different industries?"

From casual characters to formal assistants, Live2D can be adapted to match your brand's tone.

3. "How do we start integrating this?"

We recommend considering AI, TTS (voice), and Live2D together from the early phases of product design.

Disclaimer

We recommend envisioning AI, TTS (voice), and Live2D as a unified whole from the early phases of product design, with cross-functional teams collaborating on the complete experience rather than working in isolation on AI logic, voice synthesis, or Live2D animation design separately.

Genre:

AI, OpenAI, Chatbot, Digital Avatars, Animation, Virtual Assistants, Web Development

Year:

2024

Related Works

Building a Topic-Specific AI Chatbot with RAG

Roles: New Business Planning, UX Design, RAG Architecture, Development

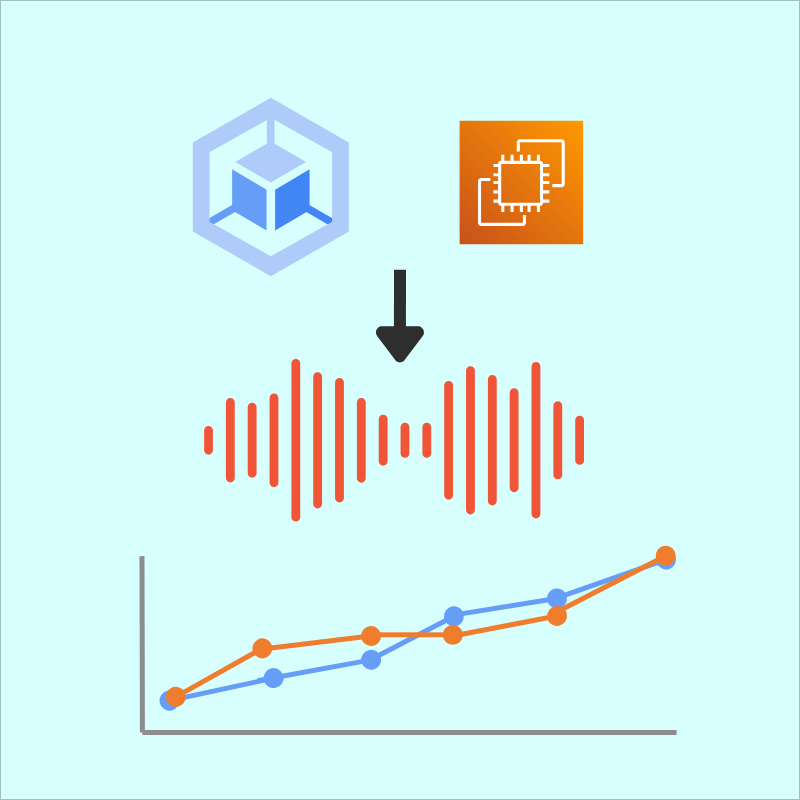

Measuring and Comparing ML Model Inference Performance on AWS and GKE

Role: Infrastructure Build, Performance Measurement, and Optimization