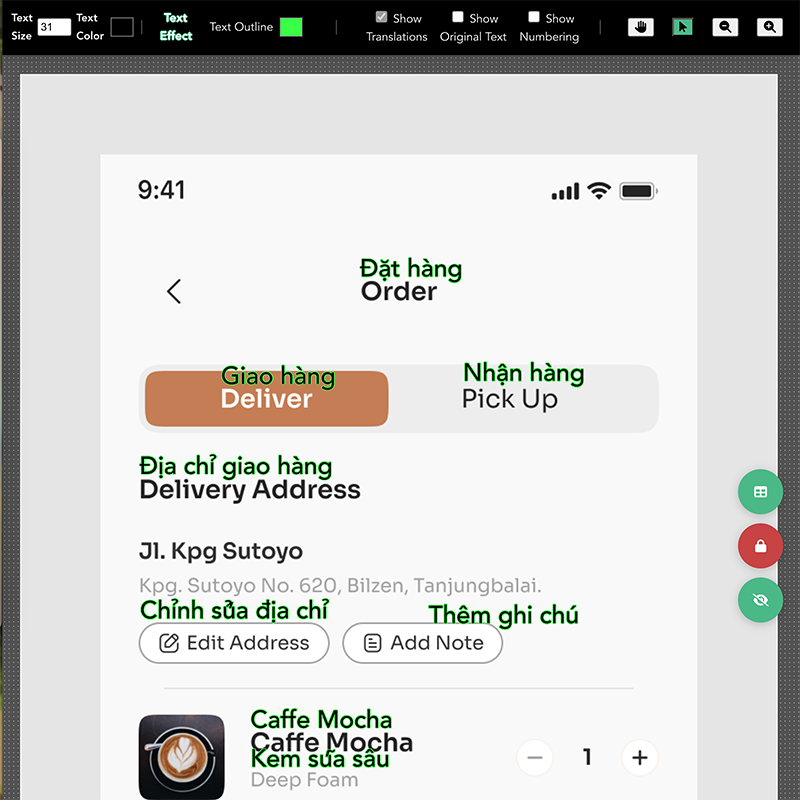

Optimizing the Execution Environment for a Speech Synthesis ML Model

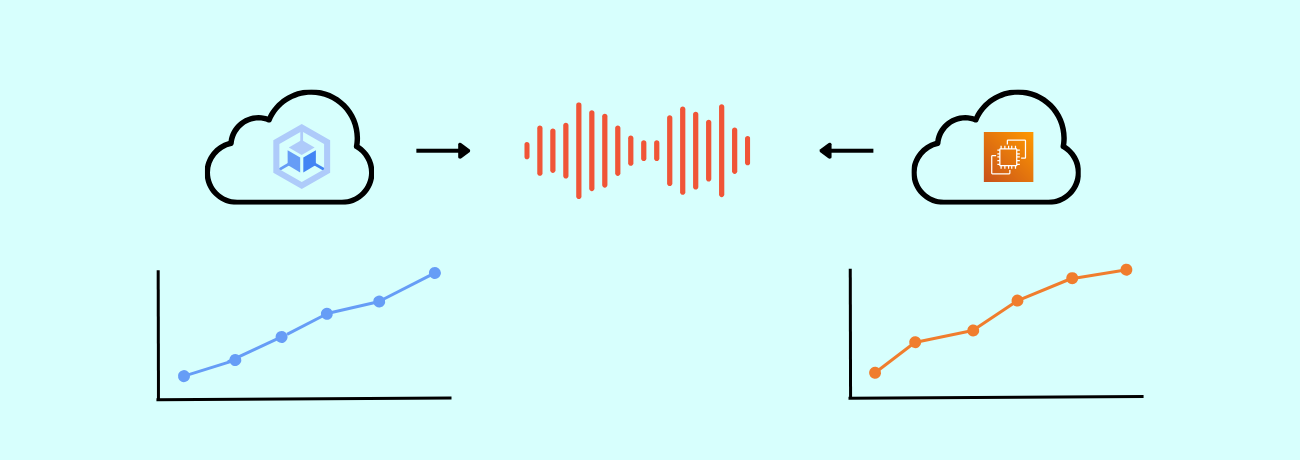

Measuring and Comparing ML Model Inference Performance on AWS and GKE

Role: Infrastructure Build, Performance Measurement, and Optimization

As part of a broader project, we designed and built an execution environment for a speech synthesis machine learning model and compared its performance on AWS GPU instances and Google Kubernetes Engine (GKE). By evaluating cost, response time, and resource utilization, we identified and deployed the infrastructure configuration that best met both performance and budget requirements.

DevOps & Backend API Development

Established DevOps practices and built backend APIs for running and operating the ML model in the cloud.

Performance Benchmarking

Measured speech synthesis API response times across various machine types and regions to identify the best-performing resource configurations.
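A minimal sketch of how such response-time measurements could be collected and summarized, assuming the speech synthesis API can be invoked through a zero-argument callable (for example, a hypothetical wrapper that POSTs text to the deployed endpoint). It reports mean, median, 95th-percentile, and worst-case latency, which makes configurations comparable beyond a single average.

```python
import statistics
import time
from typing import Callable


def benchmark(call: Callable[[], object], runs: int = 20, warmup: int = 2) -> dict:
    """Time `call` repeatedly and summarize its latency in milliseconds.

    `call` is assumed to wrap one synthesis request against one
    machine type / region; run this once per configuration under test.
    """
    if runs < 2:
        raise ValueError("need at least two runs to compute percentiles")
    # Warm-up calls absorb one-off costs such as model loading or cold caches.
    for _ in range(warmup):
        call()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    # "inclusive" treats the samples as the whole population, so every
    # percentile stays within the observed min..max range.
    pct = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "mean_ms": statistics.fmean(samples),
        "p50_ms": pct[49],
        "p95_ms": pct[94],
        "max_ms": max(samples),
    }
```

For example, `benchmark(lambda: synthesize("Hello"))`, where `synthesize` is a hypothetical client function, would return one summary per configuration. Tail percentiles such as p95 tend to matter more than the mean for an interactive speech API.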

Cost Analysis & Forecasting

Compared the costs observed during testing against the initial estimates and recalculated projected running costs to confirm long-term feasibility.
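A rough sketch of the projection arithmetic, assuming always-on nodes billed per hour and a sustainable per-node throughput measured during testing. The 730-hour month is a common convention in cloud pricing calculators; the prices and throughput below are placeholders, not figures from this project.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # 24 * 365 / 12, rounded, as used by common pricing calculators


@dataclass
class Config:
    name: str
    hourly_usd: float         # on-demand price per node (placeholder)
    nodes: int                # nodes kept running
    requests_per_hour: float  # measured sustainable throughput per node


def monthly_cost(cfg: Config, hours: float = HOURS_PER_MONTH) -> float:
    """Projected monthly cost for always-on nodes."""
    return cfg.hourly_usd * cfg.nodes * hours


def cost_per_1k_requests(cfg: Config) -> float:
    """Unit cost per 1,000 synthesis requests at full utilization of one node."""
    return cfg.hourly_usd * 1000 / cfg.requests_per_hour


def estimate_drift(estimated_usd: float, projected_usd: float) -> float:
    """Relative gap between the original estimate and the re-projected cost."""
    return (projected_usd - estimated_usd) / estimated_usd
```

With a hypothetical node at $1.00/hour, two nodes project to $1,460 per month; against an initial estimate of $1,200, `estimate_drift` returns about 0.22, i.e. roughly 22% over budget.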

Selecting the Best-Fit Environment

Weighed performance metrics against overall cost to select the infrastructure offering the best balance of the two.
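One simple way to formalize this trade-off, shown here only as an illustrative decision rule (the project's actual selection criteria are not specified), is to keep the candidates that meet a latency target and pick the cheapest among them. Candidate names, latencies, and costs below are hypothetical.

```python
from typing import NamedTuple, Optional, Sequence


class Candidate(NamedTuple):
    name: str           # platform / machine type / region label (hypothetical)
    p95_ms: float       # measured 95th-percentile response time
    monthly_usd: float  # projected monthly running cost


def pick_best_fit(candidates: Sequence[Candidate], p95_budget_ms: float) -> Optional[Candidate]:
    """Return the cheapest candidate whose p95 latency fits the budget, or None."""
    eligible = [c for c in candidates if c.p95_ms <= p95_budget_ms]
    if not eligible:
        return None
    # Break cost ties in favor of lower latency.
    return min(eligible, key=lambda c: (c.monthly_usd, c.p95_ms))
```

A hard latency threshold keeps the rule easy to explain; a weighted score over cost and latency is a common alternative when no fixed target exists.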

Production Deployment & Continuous Optimization

Deployed the production environment on the selected platform and implemented ongoing monitoring to maintain peak performance.
